AI Festivus: An AI Workshop for the Rest of Us

I recently read Kara Swisher’s Burn Book: A Tech Love Story. It’s juicy, witty, irreverent, and ominous. Check it out before a billionaire has it pulled from your local library.

The last chapter includes a quote from tech ethicist Tristan Harris. Harris notes how easily we can adopt overly optimistic or pessimistic views when humanity faces a disruptive threat on a global scale.

“The places that I think people are landing include what we call pre-tragic, which is when someone actually doesn't want to look at the tragedy—whether it's climate or some of the AI issues that are facing us or social media having downsides. We don't want to metabolize the tragedy, so we stay in naive optimism. A pre-tragic person believes, humanity always figures it out. Then there's the person who stares at the tragedy and gets stuck. In tragedy, you either get depressed or you become nihilistic.

There's a third place to go, which is what we call post-tragic, where you actually accept and grieve through some of the realities that we are facing. You have to go through the dark night of the soul and be with that so you can be with the actual dimensions of the problems that we're dealing with and you're honest about what it would take to do something about it.”

I’ve been a participant in and a presenter at a lot of AI events over the past two years, and I have yet to see one that encouraged attendees to process their feelings. Typically, the presenters do most of the talking, and it seems like someone asked them to cover the following in 60 minutes or less.

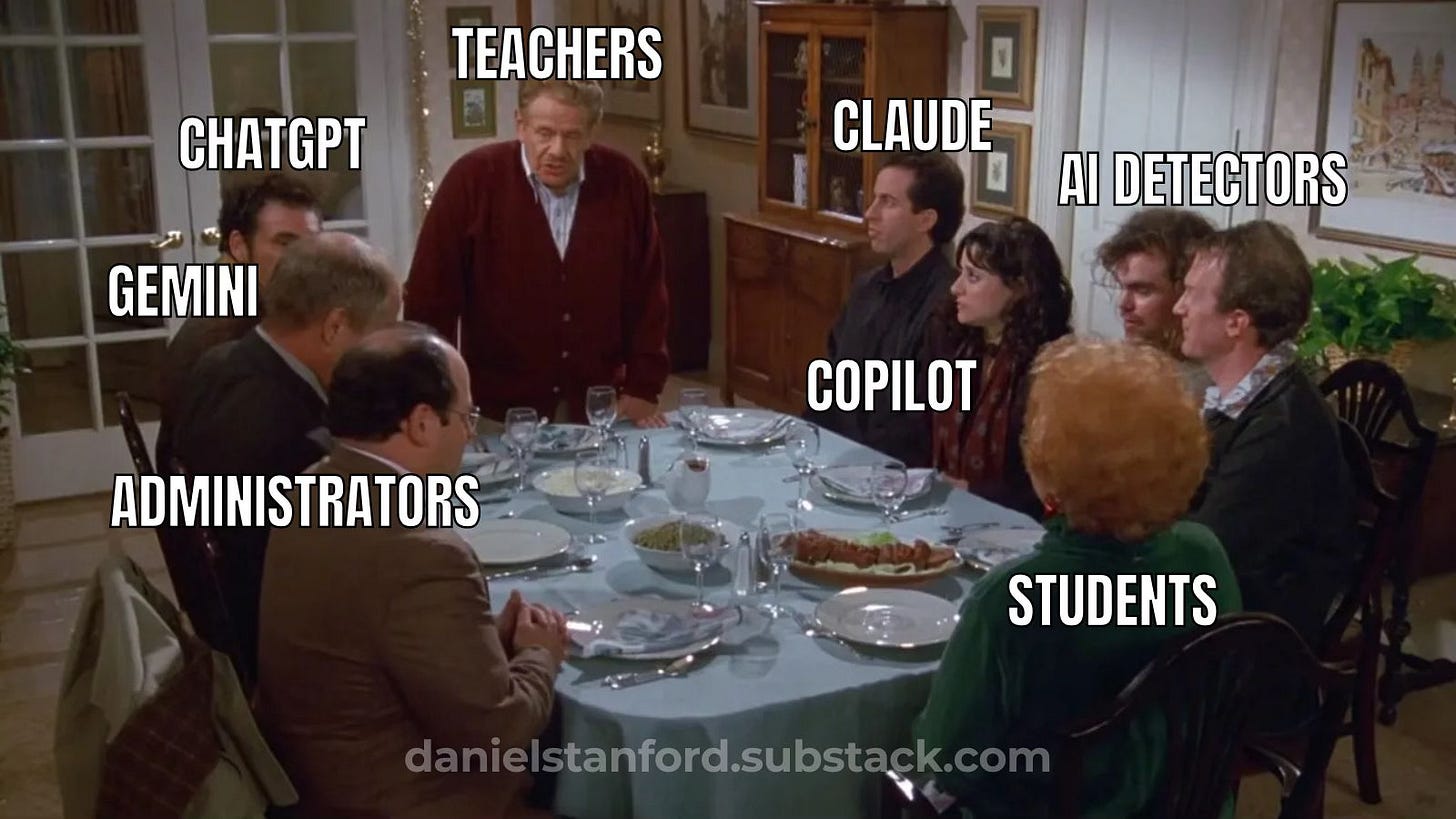

Discourage use of AI detectors in a way that doesn’t upset anyone currently using them.

Promote AI use in a way that wins over Luddites without boring early adopters. (Remind them AI is inevitable, but replace the word “inevitable” with a synonym people like.)

Acknowledge all the ethical concerns, but don’t be a downer. Stay solutions-oriented!

Provide universal policies for AI use. They should be specific enough to give all instructors peace of mind, but vague enough that no one feels too boxed in.

Give example assignments that incorporate AI. Make sure to include one that every instructor in every discipline will find relatable and valid.

My work as a consultant gives me a limited perspective, and it’s entirely possible these external-speaker presentations are being supplemented by internal offerings that provide more space for grief and the airing of grievances: an AI Festivus, if you will. In my ideal world, these events would include discussion topics like these:

Purpose and Burnout

Is there anything about AI that has you questioning your teaching philosophy or your identity as a teacher?

Do you feel you’re teaching too many skills that are timely but not timeless? Is there anything you can do to shift that?

Did your score on the Maslach Burnout Inventory surprise you? Can you imagine what support you’d need or what changes you might make to begin to address this?

Assignments and Assessment

What’s something you’re glad you learned the “old-fashioned” way that you’re afraid future students might miss out on?

When do your students need to make something from scratch, and when is it OK for them to take shortcuts? What’s an example of a “smart” shortcut you might encourage students to take in one of your assignments?

If you don’t want students to use AI in certain situations, how might you approach this without relying on AI detectors? (For examples of how AI detectors exacerbate inequity, see “AI Detection in Education is a Dead End” by Leon Furze.)

Are you currently assessing student work in a high-stakes way that might encourage unwanted AI use? Is there a small change you could make to emphasize process over product?

Some of this might seem a bit woo-woo for higher ed, but I think we ignore Tristan Harris at our peril on this one. We need space to feel our feelings and grieve if we want to make more balanced and thoughtful decisions about what comes next.

If there’s a question you’d love to see in your ideal “feelings-friendly” AI workshop or a grievance you’d like to air, feel free to share it in the comments.

Good stuff. I think Tristan Harris got pre-tragic, tragic, and post-tragic from integral philosopher of education Zak Stein, who is a true luminary and includes those three "stations" in his meta-psychology (unpublished).

Your point about timely skills vs timeless ones is rich for reflection.